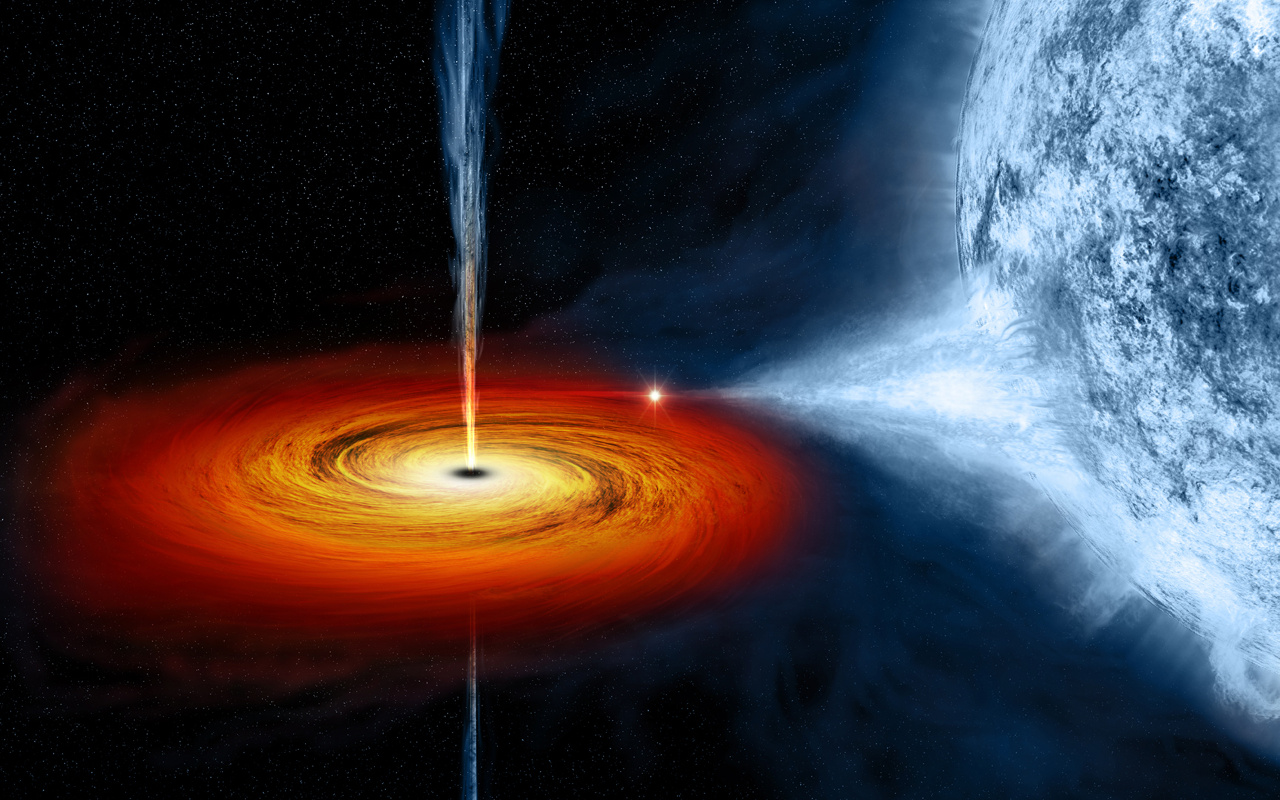

The algorithm would stitch together data collected from radio telescopes scattered around the globe, under the auspices of an international collaboration called the Event Horizon Telescope. The project seeks, essentially, to turn the entire planet into a large radio telescope dish.

“Radio wavelengths come with a lot of advantages,” says Katie Bouman, an MIT graduate student in electrical engineering and computer science, who led the development of the new algorithm. “Just like how radio frequencies will go through walls, they pierce through galactic dust. We would never be able to see into the centre of our galaxy in visible wavelengths because there’s too much stuff in between.”

But because of their long wavelengths, radio waves also require large antenna dishes. The largest single radio-telescope dish in the world has a diameter of 1,000 feet (300 metres), but an image it produced of the Moon, for example, would be blurrier than the image seen through an ordinary backyard optical telescope.
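The trade-off can be made concrete with the Rayleigh criterion, θ ≈ 1.22 λ / D, which gives the smallest angle a circular aperture of diameter D can resolve at wavelength λ. This sketch assumes a 21 cm radio wavelength and a 10 cm backyard telescope observing green light (550 nm). Neither value comes from the article.

```python
import math

RAD_TO_ARCSEC = 180 / math.pi * 3600  # about 206,265 arcseconds per radian

def rayleigh_limit_arcsec(wavelength_m: float, aperture_m: float) -> float:
    """Smallest resolvable angle (Rayleigh criterion) for a circular aperture."""
    return 1.22 * wavelength_m / aperture_m * RAD_TO_ARCSEC

# Assumed values, for illustration only.
radio = rayleigh_limit_arcsec(wavelength_m=0.21, aperture_m=300.0)    # 21 cm, 300 m dish
optical = rayleigh_limit_arcsec(wavelength_m=550e-9, aperture_m=0.1)  # green light, 10 cm lens

print(f"300 m radio dish:        {radio:7.1f} arcsec")
print(f"10 cm optical telescope: {optical:7.1f} arcsec")
print(f"radio / optical:         {radio / optical:7.0f}x blurrier")
```

With these assumed numbers, the 300 m dish resolves about 176 arcseconds and the small optical telescope about 1.4 arcseconds. The radio image is therefore roughly 127 times blurrier.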

“A black hole is very, very far away and very compact,” Bouman says. “It’s equivalent to taking an image of a grapefruit on the Moon, but with a radio telescope. To image something this small means that we would need a telescope with a 10,000-kilometre diameter, which is not practical, because the diameter of the Earth is not even 13,000 kilometres.”
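Bouman's figure can be checked to order of magnitude by turning the same Rayleigh criterion around to give D ≈ 1.22 λ / θ. The 10 cm grapefruit and the 1.3 mm observing wavelength are assumptions made for this sketch, not numbers from the article.

```python
import math

MOON_DISTANCE_M = 3.844e8      # mean Earth-Moon distance
GRAPEFRUIT_DIAMETER_M = 0.10   # assumed: a ~10 cm grapefruit
WAVELENGTH_M = 1.3e-3          # assumed: 1.3 mm millimetre-wave observing band
EARTH_DIAMETER_KM = 12_742

# Small-angle approximation: angular size = physical size / distance (radians).
theta = GRAPEFRUIT_DIAMETER_M / MOON_DISTANCE_M
theta_microarcsec = math.degrees(theta) * 3600 * 1e6

# Rayleigh criterion solved for the aperture: D = 1.22 * wavelength / theta.
required_aperture_km = 1.22 * WAVELENGTH_M / theta / 1000

print(f"grapefruit on the Moon: {theta_microarcsec:.0f} microarcseconds")
print(f"required aperture:      {required_aperture_km:,.0f} km")
print(f"Earth's diameter:       {EARTH_DIAMETER_KM:,} km")
```

These assumptions give an angle of about 54 microarcseconds and an aperture of roughly 6,100 km. That is the same order of magnitude as the 10,000 km Bouman cites. A smaller assumed size, or a requirement to resolve detail within the grapefruit rather than just its outline, pushes the number up.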

The solution adopted by the Event Horizon Telescope project is to coordinate measurements performed by radio telescopes at widely separated locations. Currently, six observatories have signed up to join the project, with more likely to follow.

But even twice that many telescopes would leave large gaps in the data as they approximate a 10,000-kilometre-wide antenna. Filling in those gaps is the purpose of algorithms like Bouman’s.
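In an interferometer, each pair of telescopes forms one baseline. At any instant, each baseline samples just one spatial frequency of the image, although the Earth's rotation sweeps these samples along tracks over the course of a night. Counting the pairs shows how few samples even a doubled array provides. The station names below are placeholders.

```python
from itertools import combinations

def baselines(stations):
    """Every unordered pair of stations forms one baseline."""
    return list(combinations(stations, 2))

six = [f"T{i}" for i in range(1, 7)]       # placeholder station labels
twelve = [f"T{i}" for i in range(1, 13)]

for array in (six, twelve):
    print(f"{len(array):2d} telescopes -> {len(baselines(array)):2d} baselines")
```

Going from 6 to 12 telescopes raises the count from 15 to 66 baselines. That is still a sparse scattering of samples compared with the continuous coverage of a single 10,000 km dish.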

Bouman will present her new algorithm — which she calls CHIRP, for Continuous High-resolution Image Reconstruction using Patch priors — at the Computer Vision and Pattern Recognition conference in June. She’s joined on the conference paper by her advisor, professor of electrical engineering and computer science Bill Freeman, and by colleagues at MIT’s Haystack Observatory and the Harvard-Smithsonian Center for Astrophysics, including Sheperd Doeleman, director of the Event Horizon Telescope project.

The Event Horizon Telescope uses a technique called interferometry, which combines the signals detected by pairs of telescopes so that the signals interfere with each other. CHIRP is not tied to this one project, though: it could be applied to any imaging system that uses radio interferometry.
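In standard radio interferometry (the van Cittert-Zernike theorem), the combined signal from a telescope pair is called a visibility. It is one Fourier component of the sky image, at a spatial frequency set by the baseline length measured in wavelengths. This sketch evaluates that sum directly for a toy Gaussian source. The 1.3 mm wavelength, the field of view, and the source size are assumptions for illustration.

```python
import numpy as np

def visibility(image, l, m, u, v):
    """Complex visibility: Fourier component of the image at spatial frequency (u, v).

    image: 2-D brightness array; l, m: sky coordinates of each pixel (radians);
    u, v: baseline components measured in wavelengths.
    """
    return np.sum(image * np.exp(-2j * np.pi * (u * l + v * m)))

# Toy 64 x 64 sky, 200 microarcseconds across, holding one Gaussian blob.
n = 64
fov = np.deg2rad(200e-6 / 3600)           # 200 microarcseconds in radians
coords = (np.arange(n) - n / 2) * fov / n
l, m = np.meshgrid(coords, coords)
sigma = fov / 10
image = np.exp(-(l**2 + m**2) / (2 * sigma**2))

wavelength = 1.3e-3                       # assumed observing wavelength (metres)
total = image.sum()
for baseline_km in (0, 1_000, 5_000, 10_000):   # east-west baselines
    u = baseline_km * 1e3 / wavelength
    amp = abs(visibility(image, l, m, u, 0.0)) / total
    print(f"{baseline_km:6d} km baseline: normalised |V| = {amp:.3f}")
```

Longer baselines probe finer detail. A smooth blob has little fine structure, so its visibility amplitude falls off as the baseline grows.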

Usually, an astronomical signal will reach any two telescopes at slightly different times. Accounting for that difference is essential to extracting visual information from the signal. But the Earth's atmosphere can also slow radio waves down by unpredictable amounts, distorting the differences in arrival time and throwing off the calculation on which interferometric imaging depends.
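It takes very little delay to spoil the measurement. An unmodelled delay τ shifts the phase of a signal at frequency ν by φ = 2π ν τ. This sketch assumes a 230 GHz observing frequency, which corresponds to a 1.3 mm wavelength. The article does not state the frequency.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0   # m/s
FREQ_HZ = 230e9                  # assumed observing frequency (1.3 mm wavelength)

def phase_error_deg(extra_delay_s, frequency_hz=FREQ_HZ):
    """Phase shift, wrapped to [0, 360) degrees, caused by an unmodelled delay."""
    return math.degrees(2 * math.pi * frequency_hz * extra_delay_s) % 360

for delay_ps in (0.1, 1.0, 2.0):
    delay_s = delay_ps * 1e-12
    path_mm = SPEED_OF_LIGHT * delay_s * 1e3
    print(f"{delay_ps:4.1f} ps ({path_mm:.2f} mm of extra path) -> "
          f"{phase_error_deg(delay_s):5.1f} deg phase error")
```

At that frequency, an extra delay of one picosecond (an extra path of only 0.3 mm) already shifts the phase by about 83 degrees.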

Bouman adopted a clever algebraic solution to this problem: If the measurements from each pair of telescopes in a trio are multiplied together, the extra delays caused by atmospheric noise cancel each other out. This does mean that each new measurement requires data from three telescopes, not just two, but the increase in precision makes up for the loss of information.
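The article does not spell out the algebra. This sketch assumes the standard radio-astronomy form of the trick, known as the bispectrum or closure phase. Each telescope i adds its own unknown phase φ_i, so the pair (i, j) picks up φ_i - φ_j. Around a closed triangle, those terms sum to zero. The visibility values below are arbitrary. The last loop counts the cost of the trick: N telescopes give N(N-1)/2 baseline phases but only (N-1)(N-2)/2 independent closure phases.

```python
import numpy as np

rng = np.random.default_rng(0)

# Error-free visibilities on the three baselines of one telescope triangle.
# The values are arbitrary, chosen only for illustration.
true_vis = {
    (1, 2): 0.8 * np.exp(1j * 0.3),
    (2, 3): 0.5 * np.exp(-1j * 1.1),
    (3, 1): 0.6 * np.exp(1j * 2.0),
}

def corrupt(vis, station_phase):
    """Baseline (i, j) picks up the phase difference phi_i - phi_j of its two stations."""
    return {(i, j): v * np.exp(1j * (station_phase[i] - station_phase[j]))
            for (i, j), v in vis.items()}

def bispectrum(vis):
    """Triple product around the triangle; its phase is the closure phase."""
    return vis[(1, 2)] * vis[(2, 3)] * vis[(3, 1)]

def independent_closure_phases(n_stations):
    """Number of independent closure phases for n stations."""
    return (n_stations - 1) * (n_stations - 2) // 2

# Random "atmospheric" phase error at each telescope.
station_phase = {s: rng.uniform(-np.pi, np.pi) for s in (1, 2, 3)}
measured = corrupt(true_vis, station_phase)

for b in true_vis:
    err = np.angle(measured[b] / true_vis[b])
    print(f"baseline {b}: phase error {err:+.3f} rad")
print(f"closure phase, true:     {np.angle(bispectrum(true_vis)):+.6f} rad")
print(f"closure phase, measured: {np.angle(bispectrum(measured)):+.6f} rad")

for n in (3, 6, 12):
    print(f"{n:2d} telescopes: {n * (n - 1) // 2:2d} baseline phases, "
          f"{independent_closure_phases(n):2d} independent closure phases")
```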

Preserving continuity

Even with atmospheric noise filtered out, the measurements from just a handful of telescopes scattered around the globe are pretty sparse; any number of possible images could fit the data equally well. So the next step is to assemble an image that both fits the data and meets certain expectations about what images look like. Bouman and her colleagues made contributions on that front, too.
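The ambiguity can be shown with linear algebra. Suppose the measurements are a linear function A x of the image x, and there are fewer independent measurements than pixels. Then A has a null space, and adding any null-space vector to an image leaves the data unchanged. The measurement operator below, which samples a handful of Fourier components of a 16-pixel 1-D image, is a toy assumption.

```python
import numpy as np

rng = np.random.default_rng(1)

n_pixels = 16          # a tiny 1-D "image"
n_freqs = 5            # only five Fourier components are measured

# Toy measurement operator: cosine and sine rows for each sampled frequency.
freqs = rng.choice(np.arange(1, n_pixels // 2), size=n_freqs, replace=False)
pix = np.arange(n_pixels)
A = np.vstack([np.cos(2 * np.pi * np.outer(freqs, pix) / n_pixels),
               np.sin(2 * np.pi * np.outer(freqs, pix) / n_pixels)])   # 10 x 16

image_a = rng.random(n_pixels)
data = A @ image_a

# Rows of Vt beyond the rank of A span its null space: invisible to the measurements.
_, _, vt = np.linalg.svd(A)
null_basis = vt[np.linalg.matrix_rank(A):]
image_b = image_a + 3.0 * null_basis[0]

print("largest pixel difference:", np.abs(image_a - image_b).max())
print("largest data difference: ", np.abs(A @ image_b - data).max())
```

Both images reproduce the data to within rounding error, so the data alone cannot choose between them. That choice has to come from assumptions about what images look like.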

The algorithm traditionally used to make sense of astronomical interferometric data assumes that an image is a collection of individual points of light, and it tries to find those points whose brightness and location best correspond to the data. Then the algorithm blurs together bright points near each other, to try to restore some continuity to the astronomical image.
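This description matches CLEAN, the long-standing standard algorithm in radio astronomy. The sketch below is a minimal 1-D version of Högbom's variant. It repeatedly finds the brightest residual point, records a fraction of it as a point source, and subtracts the instrument response at that spot. It then blurs the recorded points with a smooth Gaussian "clean beam." The sinc-shaped instrument response, the loop gain, the stopping threshold, and the toy sky are all assumptions.

```python
import numpy as np

def hogbom_clean(dirty, beam, gain=0.1, threshold=0.01, max_iter=1000):
    """Minimal 1-D Hogbom CLEAN: model the sky as a set of point sources.

    dirty: observed ("dirty") image = true sky convolved with the beam
    beam:  instrument response to a point source; odd length, peak 1 at centre
    """
    residual = np.asarray(dirty, dtype=float).copy()
    model = np.zeros_like(residual)
    half = len(beam) // 2
    for _ in range(max_iter):
        peak = int(np.argmax(np.abs(residual)))
        if abs(residual[peak]) < threshold:
            break
        flux = gain * residual[peak]
        model[peak] += flux                       # record a point of light here
        lo, hi = max(0, peak - half), min(len(residual), peak + half + 1)
        # Subtract the beam centred on the peak, clipped at the image edges.
        residual[lo:hi] -= flux * beam[half - (peak - lo):half + (hi - peak)]
    return model, residual

def restore(model, residual, width):
    """Blur the point sources with a Gaussian "clean beam" and add back the residual."""
    x = np.arange(-3 * width, 3 * width + 1)
    clean_beam = np.exp(-0.5 * (x / width) ** 2)
    return np.convolve(model, clean_beam, mode="same") + residual

# Toy sky with three isolated point sources.
n = 128
sky = np.zeros(n)
sky[[20, 60, 100]] = [1.0, 0.6, 0.8]

# Assumed instrument response: a sinc with sidelobes, mimicking sparse sampling.
beam = np.sinc(np.arange(-32, 33) / 4.0)
dirty = np.convolve(sky, beam, mode="same")

model, residual = hogbom_clean(dirty, beam)
image = restore(model, residual, width=2)
print("recovered point sources:",
      {int(i): round(float(model[i]), 3) for i in np.nonzero(model)[0]})
```

The sources here are kept far apart on purpose. When sources sit close together, their sidelobes overlap and CLEAN can place points in the wrong spots, which is part of why a smoother image model is attractive.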

To produce a more reliable image, CHIRP uses a model that’s slightly more complex than individual points but is still mathematically tractable. You could think of the model as a rubber sheet covered with regularly spaced cones whose heights vary but whose bases all have the same diameter.
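In one dimension a cone becomes a triangle. The model is then a sum of identical triangular pulses on a regular grid, each scaled by its own height. This sketch assumes that each pulse's half-width equals the grid spacing. Under that assumption, the sum passes through every peak, runs in straight lines between neighbours, and slopes down to zero beside flat stretches, which is the behaviour the plastic-wrap picture describes.

```python
import numpy as np

def triangle_pulse(x, half_width):
    """1-D 'cone': height 1 at the centre, falling linearly to 0 at +/- half_width."""
    return np.clip(1.0 - np.abs(x) / half_width, 0.0, None)

def continuous_image(x, heights, spacing):
    """Brightness at arbitrary positions x from equal-width pulses on a regular grid.

    heights[k] scales the pulse centred at k * spacing; every pulse has the same
    half-width (equal to the spacing), so neighbouring pulses overlap.
    """
    centres = np.arange(len(heights)) * spacing
    pulses = triangle_pulse(x[:, None] - centres[None, :], spacing)
    return pulses @ heights

# Heights of the "cones"; zeros are flat stretches of the sheet.
heights = np.array([0.0, 0.0, 1.0, 3.0, 2.0, 0.0, 0.0, 0.5, 0.0])
x = np.linspace(0.0, 8.0, 801)      # far finer than the pulse grid
brightness = continuous_image(x, heights, spacing=1.0)
```

Because the brightness is defined at every position, not only at grid points, it can be evaluated as finely as needed and never jumps.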

Fitting the model to the interferometric data is a matter of adjusting the heights of the cones, which could be zero for long stretches, corresponding to a flat sheet. Translating the model into a visual image is like draping plastic wrap over it: The plastic will be pulled tight between nearby peaks, but it will slope down the sides of the cones adjacent to flat regions. The altitude of the plastic wrap corresponds to the brightness of the image. Because that altitude varies continuously, the model preserves the natural continuity of the image.
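One way to see why such a model stays tractable is that every pulse has the same shape. Each visibility is therefore a linear function of the heights: `V(u) = H(u) * sum_k c_k * exp(-2*pi*i*u*x_k)`, where `H(u)` is the Fourier transform of one pulse. For a triangle of half-width w, `H(u) = w * sinc(w*u)**2`. Fitting the heights then reduces to linear least squares. This toy is noise-free and has more measurements than unknowns. The sampled frequencies, grid size, and heights are all assumptions.

```python
import numpy as np

SPACING = 1.0                  # assumed pulse grid spacing (sky units)
K = 12                         # assumed number of pulses
centres = np.arange(K) * SPACING

def pulse_ft(u, half_width=SPACING):
    """Fourier transform of a unit-height triangle of the given half-width."""
    return half_width * np.sinc(half_width * u) ** 2   # np.sinc(t) = sin(pi t)/(pi t)

def forward_matrix(u):
    """Matrix mapping pulse heights to visibilities at spatial frequencies u."""
    return pulse_ft(u)[:, None] * np.exp(-2j * np.pi * np.outer(u, centres))

# Mostly flat sheet with a few raised cones.
true_heights = np.zeros(K)
true_heights[3:7] = [1.0, 3.0, 2.0, 0.5]

u = np.linspace(0.0, 0.45, 20)           # sampled spatial frequencies
A = forward_matrix(u)
vis = A @ true_heights                   # noise-free measurements

# Heights are real, so solve the real and imaginary parts as one real system.
A_real = np.vstack([A.real, A.imag])
b_real = np.concatenate([vis.real, vis.imag])
est_heights, *_ = np.linalg.lstsq(A_real, b_real, rcond=None)

print(np.round(est_heights, 6))
```

With real, sparse data there are fewer measurements than heights. The fit then needs extra assumptions about what images look like to pick a single answer.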

Of course, Bouman’s cones are a mathematical abstraction, and the plastic wrap is a virtual “envelope” whose altitude is determined computationally. And, in fact, mathematical objects called splines, which curve smoothly, like parabolas, turned out to work better than cones in most cases. But the basic idea is the same.
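The article does not say why splines did better, but swapping them in does not cost tractability. A cone, or triangle in 1-D, is the linear B-spline, and its Fourier transform is sinc^2. The cubic B-spline, a smooth piecewise-cubic curve, has the Fourier transform sinc^4. Its spectrum therefore falls off faster at high frequencies. This sketch checks both closed forms numerically. Using the cubic B-spline as the representative spline is an assumption, since the article does not say which splines were used.

```python
import numpy as np

def triangle(x):
    """Linear B-spline, i.e. the 1-D cone: support [-1, 1]."""
    return np.clip(1.0 - np.abs(x), 0.0, None)

def cubic_bspline(x):
    """Centred cubic B-spline with unit knot spacing: support [-2, 2]."""
    ax = np.abs(x)
    return np.where(ax < 1, 2 / 3 - ax**2 + ax**3 / 2,
                    np.where(ax < 2, (2 - ax) ** 3 / 6, 0.0))

def numerical_ft(f, u, support=2.0, n=40_000):
    """Fourier transform of an even function at frequency u, by the midpoint rule."""
    dx = 2 * support / n
    x = -support + (np.arange(n) + 0.5) * dx
    return np.sum(f(x) * np.cos(2 * np.pi * u * x)) * dx

for u in (0.0, 0.3, 0.7, 1.5):
    print(f"u={u}: triangle {numerical_ft(triangle, u):.6f} vs sinc^2 {np.sinc(u)**2:.6f}; "
          f"cubic {numerical_ft(cubic_bspline, u):.6f} vs sinc^4 {np.sinc(u)**4:.6f}")
```

Swapping the pulse shape therefore only changes `H(u)` in a forward model like the one used for cones. Everything else in the fit stays linear.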

Prior knowledge

Finally, Bouman used a machine-learning algorithm to identify visual patterns that tend to recur in 64-pixel patches of real-world images, and she used those features to further refine her algorithm’s image reconstructions. In separate experiments, she extracted patches from astronomical images and from snapshots of terrestrial scenes, but the choice of training data had little effect on the final reconstructions.
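The article does not name the learning method, so this sketch substitutes principal component analysis. It cuts every overlapping 8 x 8 patch (64 pixels) out of a set of training images and removes each patch's average brightness. It then keeps the directions of greatest variation as the "recurring patterns." The toy training images of random smooth blobs are placeholders, and the sketch does not show how learned patterns would then refine a reconstruction.

```python
import numpy as np

PATCH = 8  # 8 x 8 = 64 pixels per patch

def extract_patches(image, size=PATCH):
    """Every overlapping size x size patch, flattened to a row of size**2 values."""
    h, w = image.shape
    return np.array([image[r:r + size, c:c + size].ravel()
                     for r in range(h - size + 1)
                     for c in range(w - size + 1)])

def learn_patch_patterns(images, n_patterns=10):
    """Principal components of mean-removed patches (PCA as a stand-in learner)."""
    patches = np.vstack([extract_patches(img) for img in images])
    patches = patches - patches.mean(axis=1, keepdims=True)  # drop each patch's mean level
    _, _, vt = np.linalg.svd(patches, full_matrices=False)
    return vt[:n_patterns]  # rows are 64-pixel patterns; reshape to (8, 8) to view

def toy_image(rng, size=32, n_blobs=5):
    """Placeholder training image: a sum of random smooth Gaussian blobs."""
    yy, xx = np.mgrid[0:size, 0:size]
    img = np.zeros((size, size))
    for _ in range(n_blobs):
        cy, cx = rng.uniform(0, size, 2)
        width = rng.uniform(2, 6)
        img += rng.random() * np.exp(-((yy - cy) ** 2 + (xx - cx) ** 2) / (2 * width**2))
    return img

rng = np.random.default_rng(3)
training = [toy_image(rng) for _ in range(20)]
patterns = learn_patch_patterns(training)
print(patterns.shape)
```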

Bouman prepared a large database of synthetic astronomical images and the measurements they would yield at different telescopes, given random fluctuations in atmospheric noise, thermal noise from the telescopes themselves, and other types of noise. Her algorithm was frequently better than its predecessors at reconstructing the original image from the measurements and tended to handle noise better. She’s also made her test data publicly available online for other researchers to use.
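The article does not describe how the database was built. This hypothetical sketch shows the general recipe for one simple case:

- draw a random synthetic image;
- compute the ideal visibilities for every telescope pair;
- corrupt them with a random phase per telescope (standing in for the atmosphere) and complex Gaussian thermal noise per pair;
- store triple products for every triangle of telescopes.

The 1-D sky, the station positions, and the noise levels are all assumptions.

```python
import numpy as np
from itertools import combinations

def random_image(x, rng):
    """A random Gaussian blob on a 1-D sky (hypothetical stand-in for a synthetic image)."""
    centre = rng.uniform(-5e-10, 5e-10)
    width = rng.uniform(5e-11, 2e-10)
    return np.exp(-0.5 * ((x - centre) / width) ** 2)

def visibilities(image, x, positions_wl):
    """Noise-free visibility for every station pair; baselines measured in wavelengths."""
    vis = {}
    for i, j in combinations(range(len(positions_wl)), 2):
        u = positions_wl[j] - positions_wl[i]
        vis[(i, j)] = np.sum(image * np.exp(-2j * np.pi * u * x))
    return vis

def observe(image, x, positions_wl, rng, thermal_sigma=0.05):
    """Noisy measurements: one random atmospheric phase per station, thermal noise per pair."""
    atmos = rng.uniform(-np.pi, np.pi, len(positions_wl))
    noisy = {}
    for (i, j), v in visibilities(image, x, positions_wl).items():
        thermal = thermal_sigma * (rng.standard_normal() + 1j * rng.standard_normal())
        noisy[(i, j)] = v * np.exp(1j * (atmos[i] - atmos[j])) + thermal
    return noisy

def bispectra(vis, n_stations):
    """Triple product V_ij * V_jk * conj(V_ik) for every triangle i < j < k."""
    return {(i, j, k): vis[(i, j)] * vis[(j, k)] * np.conj(vis[(i, k)])
            for i, j, k in combinations(range(n_stations), 3)}

x = np.linspace(-1e-9, 1e-9, 64)                                    # sky positions (radians)
positions_wl = np.array([0.0, 1.3e8, 4.1e8, 7.9e8, 1.2e9, 2.3e9])  # hypothetical stations

rng = np.random.default_rng(4)
dataset = []
for _ in range(100):
    image = random_image(x, rng)
    measured = observe(image, x, positions_wl, rng)
    dataset.append((image, bispectra(measured, len(positions_wl))))
```

Setting `thermal_sigma=0` gives a useful sanity check. The atmospheric phases then cancel exactly in every triple product, so the stored values match the noise-free ones.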